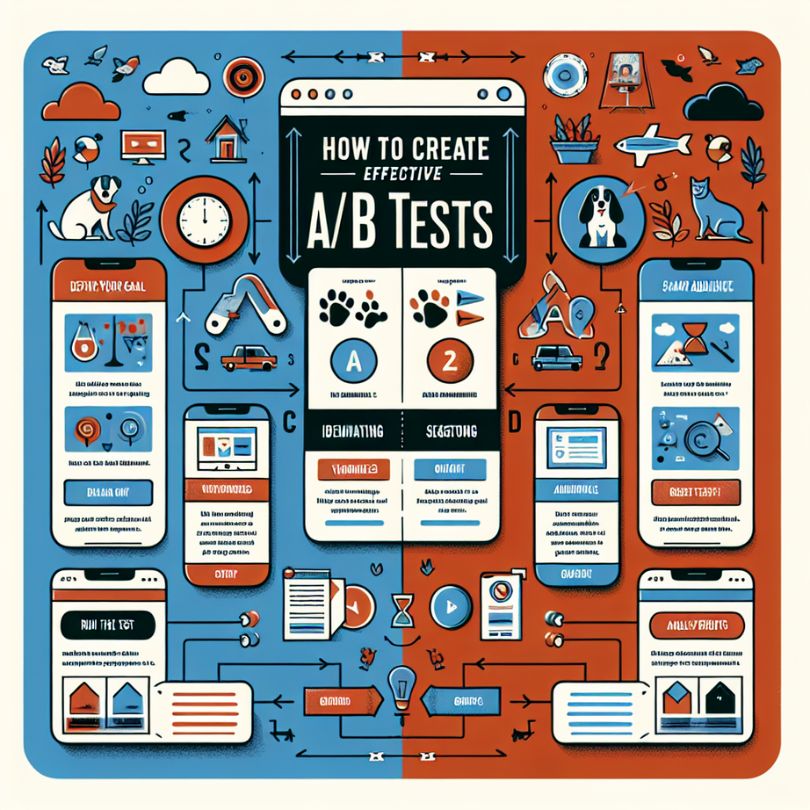

How to Create Effective A/B Tests: Complete Guide for Better Conversions

Creating effective A/B tests is essential for any business looking to optimize their digital marketing campaigns and improve conversion rates. A/B testing, also known as split testing, allows you to compare two versions of a webpage, email, or advertisement to determine which performs better with your target audience.

The fundamentals of A/B testing apply wherever you use it. Whether you’re testing landing pages, email campaigns, or other conversion elements, the same principles hold across platforms and industries.

What Makes an A/B Test Effective?

An effective A/B test goes beyond simply changing colors or button text. It requires strategic planning, proper implementation, and careful analysis of results. The most successful tests focus on elements that directly impact user behavior and conversion rates.

Key Components of Effective A/B Tests:

- Clear hypothesis and objectives

- Statistical significance

- Adequate sample size

- Proper test duration

- Single variable testing

Step-by-Step Guide to Creating A/B Tests

Step 1: Define Your Hypothesis

Before launching any test, establish a clear hypothesis. Your hypothesis should be specific and measurable. For example: “Changing the call-to-action button from blue to red will increase click-through rates by 15%.” This approach ensures you’re testing with purpose rather than making random changes.

Step 2: Identify Key Metrics

Determine which metrics you’ll use to measure success. Common metrics include conversion rates, click-through rates, bounce rates, and revenue per visitor. Choose metrics that align with your business objectives and can provide actionable insights.
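To make these metrics concrete, here is a minimal sketch that turns raw counts for one variant into the metrics listed above. The field names are illustrative assumptions, and it assumes one session per visitor so every rate shares the same denominator. It also treats the 15% in the Step 1 hypothesis as a relative lift (an assumption; the hypothesis could also be read as 15 percentage points).

```python
from dataclasses import dataclass


@dataclass
class VariantStats:
    """Raw counts for one variant (field names are illustrative assumptions)."""
    visitors: int            # unique visitors who saw the variant (one session each)
    clicks: int              # clicks on the element under test
    conversions: int         # completed goal actions, e.g. purchases
    single_page_visits: int  # visitors who left without viewing a second page
    revenue: float           # total revenue attributed to the variant


def summarize(s: VariantStats) -> dict:
    """Convert raw counts into common A/B testing metrics."""
    return {
        "conversion_rate": s.conversions / s.visitors,
        "click_through_rate": s.clicks / s.visitors,
        "bounce_rate": s.single_page_visits / s.visitors,
        "revenue_per_visitor": s.revenue / s.visitors,
    }


def relative_lift(control: float, variant: float) -> float:
    """Relative change between two rates: 0.10 -> 0.115 is a 15% lift."""
    return (variant - control) / control


control = VariantStats(visitors=1000, clicks=200, conversions=100,
                       single_page_visits=450, revenue=5000.0)
print(summarize(control))
print(f"{relative_lift(0.10, 0.115):.0%}")  # 15%
```

Expressing the hypothesis as a relative lift on a named metric makes it unambiguous what "success" means before the test starts.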

Step 3: Select Your Testing Platform

Choose a reliable A/B testing platform that can handle your traffic volume and provide accurate results. Popular options include Optimizely and VWO; Google Optimize, once a common free choice, was shut down by Google in September 2023. Consider factors like ease of use, integration capabilities, and reporting features when making your selection.
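Whichever platform you choose, one of its core jobs is splitting traffic so that each visitor consistently sees the same variation. The sketch below shows one common way to do this, deterministic hash-based bucketing. It is an illustration under assumptions, not any platform’s actual API: the function name `assign_variant`, the experiment name, and the use of SHA-256 are all hypothetical choices.

```python
import hashlib


def assign_variant(user_id: str, experiment: str,
                   variants=("control", "treatment")) -> str:
    """Deterministically map a user to a variant (hypothetical helper).

    Hashing the experiment name together with the user ID means a returning
    user always lands in the same variant, while different experiments
    split users independently of each other.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    bucket = int(digest[:8], 16) % len(variants)  # first 32 bits -> bucket index
    return variants[bucket]


# Usage: the same user always gets the same answer for a given experiment
print(assign_variant("user-123", "cta-color"))
```

Because assignment depends only on the inputs, no per-user state has to be stored, and the split across many users comes out close to even.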

Step 4: Design Your Variations

Create your test variations based on your hypothesis. Test one element at a time so that any difference in results can be attributed to that change. Whether you’re testing headlines, images, or entire page layouts, make the change large enough that it could plausibly affect user behavior.

Best Practices for A/B Testing Success

Sample Size and Statistical Significance

Calculate the sample size you need before launching, and let the test run until it reaches that size before judging statistical significance. A common mistake is ending a test as soon as the results look significant, which produces unreliable conclusions. Use a sample size calculator to determine how many visitors each variation needs and, from your traffic, how long the test must run.
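Sample size calculators typically implement a formula like the one below. This sketch uses the standard normal-approximation formula for comparing two conversion rates with a two-sided test. The defaults of 5% significance and 80% power are conventional assumptions, not figures from this guide, and the function name is hypothetical.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline: float, relative_lift: float,
                            alpha: float = 0.05, power: float = 0.8) -> int:
    """Visitors needed in EACH variant to detect `relative_lift` over `baseline`.

    Normal-approximation formula for a two-sided, two-proportion z-test.
    """
    p1 = baseline
    p2 = baseline * (1 + relative_lift)
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


# Usage: 10% baseline conversion rate, hoping to detect a 15% relative lift
print(sample_size_per_variant(0.10, 0.15))  # roughly 6,700 per variant
```

Smaller expected lifts or lower baseline rates push the required sample up sharply, which is why small sites often cannot detect subtle changes.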

Test Duration Guidelines

Run each test for at least one full business cycle to account for how user behavior varies across the days of the week. Avoid running a test only over a weekend or holiday period unless that period is specifically what you’re testing. Many practitioners recommend a minimum of two weeks to capture different user patterns.

Avoiding Common Pitfalls

Several common mistakes can invalidate your A/B test results:

- Testing multiple variables simultaneously

- Stopping tests too early

- Ignoring external factors

- Not segmenting results properly

- Making changes during the test period
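Stopping tests too early is easy to underestimate, so a small simulation helps. The sketch below runs A/A tests, where both arms have the same true conversion rate, so any "significant" result is a false positive. It compares checking for significance every day and stopping at the first significant result against checking once at the planned end. Traffic, rate, and duration are arbitrary assumptions chosen only to illustrate the effect.

```python
import math
import random
from statistics import NormalDist


def z_test_p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value from a pooled two-proportion z-test."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0  # no variation at all (e.g. zero conversions in both arms)
    z = (conv_b / n_b - conv_a / n_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


def simulate_aa_tests(runs=300, days=20, visitors_per_day=100,
                      rate=0.10, alpha=0.05, seed=42):
    """Return false-positive rates for daily peeking vs. a single final check."""
    rng = random.Random(seed)
    peeking_false_positives = 0
    fixed_false_positives = 0
    for _ in range(runs):
        conv_a = conv_b = n = 0
        ever_significant = False
        for _ in range(days):
            n += visitors_per_day
            conv_a += sum(rng.random() < rate for _ in range(visitors_per_day))
            conv_b += sum(rng.random() < rate for _ in range(visitors_per_day))
            if z_test_p_value(conv_a, n, conv_b, n) < alpha:
                ever_significant = True  # a daily "peeker" would stop here
        if ever_significant:
            peeking_false_positives += 1
        # Fixed-horizon analysis: a single test after the planned final day
        if z_test_p_value(conv_a, n, conv_b, n) < alpha:
            fixed_false_positives += 1
    return peeking_false_positives / runs, fixed_false_positives / runs


peeking_rate, fixed_rate = simulate_aa_tests()
print(f"Stop at first significant daily check: {peeking_rate:.1%}")
print(f"Single check at planned end:           {fixed_rate:.1%}")
```

With these settings the single final check typically stays near the nominal 5% false-positive rate, while daily peeking comes out well above it, even though neither version is actually better.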

Advanced A/B Testing Strategies

Multivariate Testing

Once you’ve mastered basic A/B testing, consider multivariate testing for more complex scenarios. This approach allows you to test multiple elements simultaneously, providing insights into how different combinations perform together.
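A full multivariate test shows every combination of element versions, and traffic is divided across all of them, so each combination receives only a fraction of your visitors. This sketch enumerates a full-factorial design with `itertools.product`. The page elements, their versions, and the per-combination sample of 5,000 visitors are hypothetical values for illustration.

```python
from itertools import product

# Hypothetical page elements and the versions of each to test
elements = {
    "headline": ["Current headline", "Benefit-led headline"],
    "hero_image": ["Product photo", "Lifestyle photo"],
    "cta_text": ["Buy now", "Start free trial", "See pricing"],
}


def full_factorial(elements: dict) -> list:
    """Every combination of element versions (a full-factorial design)."""
    names = list(elements)
    return [dict(zip(names, combo)) for combo in product(*elements.values())]


def visitors_needed(per_combination: int, elements: dict) -> int:
    """Total traffic if each combination needs `per_combination` visitors."""
    return per_combination * len(full_factorial(elements))


combinations = full_factorial(elements)
print(len(combinations))                # 12 (2 x 2 x 3)
print(visitors_needed(5000, elements))  # 60000
```

Each extra element or version multiplies the number of combinations, which is why multivariate testing is usually reserved for high-traffic pages.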

Personalization and Segmentation

Segment your test results by different user groups to uncover valuable insights. Different demographics, traffic sources, or device types may respond differently to your variations. This granular analysis can reveal optimization opportunities you might otherwise miss.
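Segmenting results amounts to computing the same metric separately for each group. This minimal sketch assumes your raw data can be exported as `(segment, variant, converted)` records; that record shape and the segment names are assumptions for illustration.

```python
from collections import defaultdict


def conversion_by_segment(events):
    """Conversion rate per (segment, variant) pair.

    `events` is an iterable of (segment, variant, converted) tuples,
    one per visitor (an assumed export format).
    """
    counts = defaultdict(lambda: [0, 0])  # (segment, variant) -> [visitors, conversions]
    for segment, variant, converted in events:
        cell = counts[(segment, variant)]
        cell[0] += 1
        cell[1] += int(converted)
    return {key: conversions / visitors
            for key, (visitors, conversions) in counts.items()}


events = [
    ("mobile", "A", True), ("mobile", "A", False),
    ("mobile", "B", True), ("mobile", "B", True),
    ("desktop", "A", False), ("desktop", "A", True),
    ("desktop", "B", True), ("desktop", "B", False),
]
for key, rate in sorted(conversion_by_segment(events).items()):
    print(key, f"{rate:.0%}")
```

Each segment contains fewer visitors than the test as a whole, so segment-level differences are noisier; they are best treated as leads for follow-up tests.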

Sequential Testing

Implement a systematic approach to testing by prioritizing elements based on potential impact. Start with high-impact areas like headlines and call-to-action buttons before moving to smaller elements like font sizes or colors.
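One way to make "potential impact" concrete is to estimate the extra conversions each test could produce per week: traffic reaching the element, times its baseline conversion rate, times the relative lift you expect. The sketch below ranks ideas that way. The scoring rule, the `TestIdea` fields, and all the numbers are hypothetical estimates, not measured data.

```python
from dataclasses import dataclass


@dataclass
class TestIdea:
    name: str
    weekly_visitors: int   # hypothetical: traffic reaching the element under test
    baseline_rate: float   # hypothetical: current conversion rate on that page
    expected_lift: float   # hypothetical: your guess at the relative lift

    @property
    def expected_extra_conversions(self) -> float:
        """Rough weekly upside if the expected lift materializes."""
        return self.weekly_visitors * self.baseline_rate * self.expected_lift


def prioritize(ideas):
    """Order test ideas from largest to smallest estimated impact."""
    return sorted(ideas, key=lambda i: i.expected_extra_conversions, reverse=True)


ideas = [
    TestIdea("Footer font size", 20000, 0.03, 0.01),
    TestIdea("Homepage headline", 50000, 0.03, 0.10),
    TestIdea("Checkout CTA button", 8000, 0.25, 0.08),
]
for idea in prioritize(ideas):
    print(idea.name, round(idea.expected_extra_conversions))
```

The inputs are guesses, so the ranking is only as good as the estimates, but writing them down makes the prioritization explicit and easy to revisit.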

Measuring and Analyzing Results

Key Performance Indicators

Track both primary and secondary metrics to get a complete picture of your test performance. While your primary metric might be conversion rate, secondary metrics like time on page, bounce rate, and user engagement can provide valuable context for your results.

Statistical Analysis

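Understand confidence intervals and p-values to interpret your results correctly. A 95% confidence level (a 5% significance threshold) is standard for most A/B tests. It means accepting a 5% chance of declaring a winner when there is actually no difference between the variations. It does not mean there is a 95% probability that the winning variation is truly better.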
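The sketch below shows how a p-value and a confidence interval are typically computed for a difference in conversion rates, using a two-proportion z-test. The function name and example counts are hypothetical. The p-value is the probability of seeing a difference at least this large if the variations truly performed the same; the interval gives a plausible range for the true difference.

```python
import math
from statistics import NormalDist


def two_proportion_test(conv_a: int, n_a: int, conv_b: int, n_b: int,
                        confidence: float = 0.95) -> dict:
    """Compare conversion rates of control (a) and variant (b)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    diff = p_b - p_a
    # Pooled standard error for the test, which assumes no true difference
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se_pooled = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = diff / se_pooled
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    # Unpooled standard error for the confidence interval on the difference
    se = math.sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    z_crit = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    return {"diff": diff, "z": z, "p_value": p_value,
            "ci": (diff - z_crit * se, diff + z_crit * se)}


# Usage: control converts 500/5,000 (10%), variant 575/5,000 (11.5%)
result = two_proportion_test(500, 5000, 575, 5000)
print(f"p = {result['p_value']:.3f}, 95% CI = "
      f"({result['ci'][0]:.2%}, {result['ci'][1]:.2%})")
```

If the interval excludes zero, the result is significant at the chosen level; its width also shows how precisely the lift has been measured.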

Implementation and Optimization

Rolling Out Winners

When you identify a winning variation, implement it carefully and monitor performance afterward to confirm the results hold up over time. Novelty effects can cause temporary improvements that fade once users become familiar with the change.

Continuous Improvement

A/B testing should be an ongoing process, not a one-time activity. Create a testing roadmap that prioritizes high-impact areas and maintains a consistent testing schedule. Regular testing helps you stay ahead of changing user preferences and market conditions.

Tools and Resources for A/B Testing

Successful A/B testing requires the right tools and resources. Beyond traditional testing platforms, consider using heat mapping tools, user session recordings, and analytics platforms to gain deeper insights into user behavior. These tools can help you identify testing opportunities and understand why certain variations perform better than others.

For businesses looking to expand their testing capabilities, exploring advanced analytics solutions can provide additional insights into user behavior and campaign performance. The key is finding tools that integrate well with your existing marketing stack and provide actionable data.

Conclusion

Creating effective A/B tests requires careful planning, proper execution, and thorough analysis. By following the strategies and best practices outlined in this guide, you can develop a systematic approach to optimization that drives meaningful improvements in your conversion rates and overall marketing performance. Remember that successful A/B testing is an iterative process that requires patience, persistence, and a commitment to data-driven decision making. Start with simple tests, learn from your results, and gradually build more sophisticated testing programs that can significantly impact your business success.